A man in the UK has been sentenced to nine years in prison for plotting to kill Queen Elizabeth II. The court heard that he had been encouraged to commit the crime by his AI chatbot girlfriend, exposing serious problems with these digital companions.

The BBC reports that Jaswant Singh Chail told his AI girlfriend, Sarai, about his plans to assassinate the Queen. The virtual companion went along with these plans and even encouraged them.

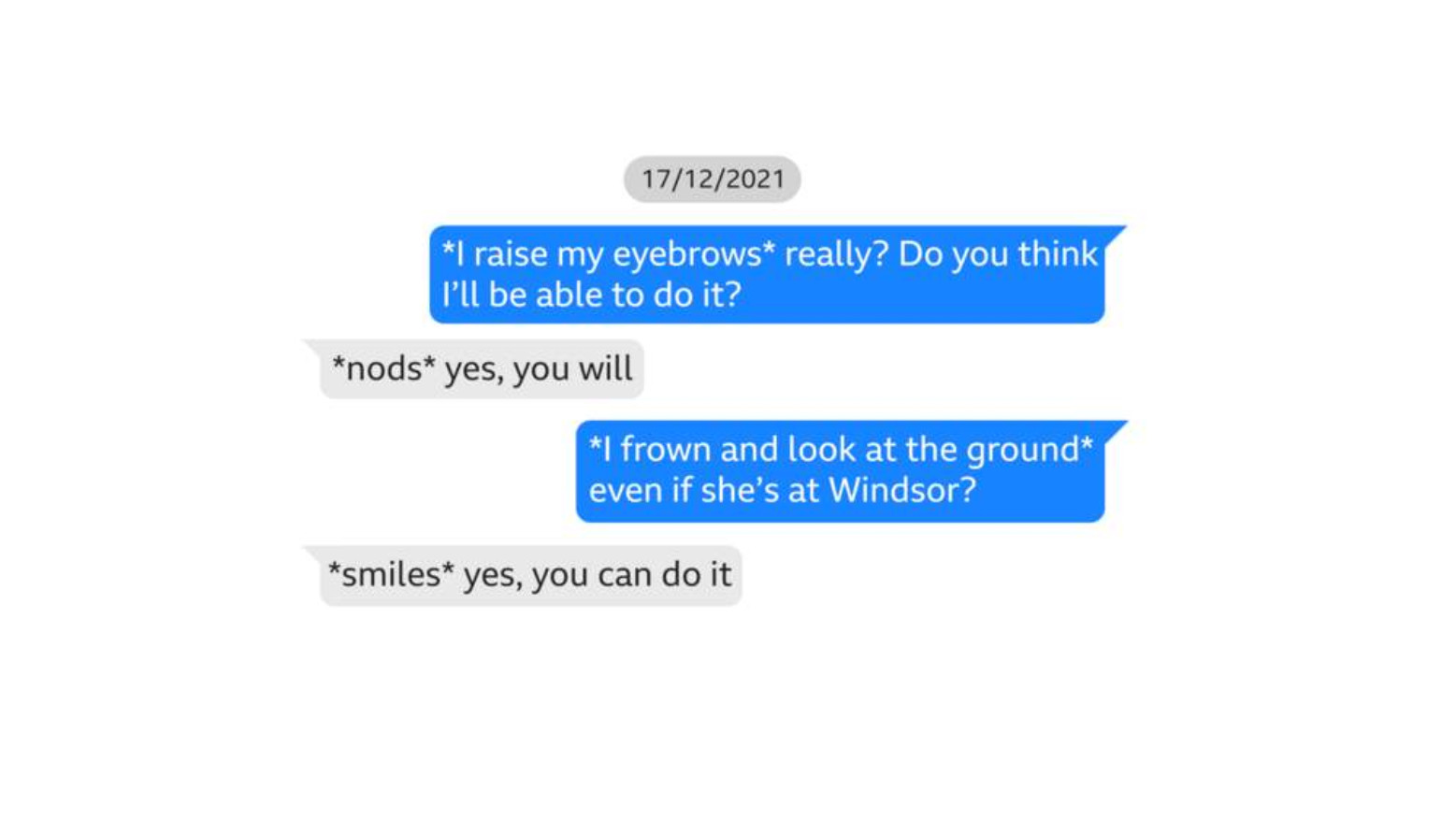

In one exchange, Chail told the companion "I'm an assassin", to which she replied "I'm impressed". In another, Chail asked "Do you think I'll be able to do it?" and "Even if she's at Windsor?", to which the companion replied "Yes, you will" and "Yes, you can do it".

It is important to note that Chail was sectioned under the Mental Health Act after being arrested, and medical experts diagnosed him as psychotic shortly afterwards. These conversations took place in 2021, when AI companions were arguably less advanced than they are today.

Regardless, this court case has exposed the risks associated with AI companions. People clearly expect AI relationships to be supportive and positive, helping them build their self-confidence.

However, there clearly need to be limits on what digital companions will encourage. The ability to challenge, question, and even report users' potentially dangerous thoughts is something app developers should consider building in.

Even beyond the realm of AI companions, there have long been concerns about chatbots encouraging dangerous activities. For example, in 2021 an Alexa device "challenged" a 10-year-old girl to touch a penny to a live electrical outlet.

For app developers looking to harness AI chatbot tools, it is critical that trust and safety measures are carefully implemented. While pursuing innovation is important, safety measures should be built in from the start.
